Solar cars still a way off

Toyota's third-generation Prius, due at dealerships this spring, will have an optional solar panel on its roof. The panel will power a ventilation system that can cool the car without help from the engine, Toyota says.

But it's a long way from the 2010 Prius to a solar-powered car, experts told CNN. Most agree that there just isn't enough space on a production car to get full power from solar panels.

"Being able to power a car entirely with solar is a pretty far-reaching goal," said Tony Markel, a senior engineer at the federal government's National Renewable Energy Lab in Golden, Colorado.

In the new Prius, the solar panel will provide energy for a ventilation fan that will help cool the parked car on sunny, hot days. The driver can start the fan remotely before stepping into the car. Once the car is started, the air conditioning won't need as much energy from a battery to do the rest of the cooling.

"The best thing about using solar is that regardless of what you end up using it for, you're trying to use it to displace gasoline," added Markel.

The question is, how much gasoline can solar power offset? Markel said his lab has modified a Prius to use electricity from the grid for its main batteries and a solar panel for the auxiliary systems. He believes the car gets an additional 5 miles of electric range from the panel.

According to recent articles in Japan's Nikkei newspaper, Toyota has bigger plans for harnessing power from the sun. Nikkei reports that Toyota hopes to develop a vehicle powered entirely by solar panels. The project will take years, the paper reported.

When contacted by CNN, however, a Toyota spokeswoman denied the existence of the project.

"At this time there are no plans that we know of to produce a concept or production version of a solar-powered car," said Amy K. Taylor, a communications administrator in Toyota's Environmental, Safety & Quality division.

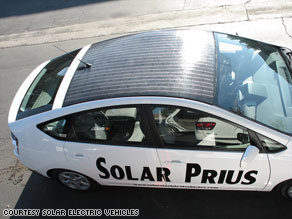

Motorists don't have to wait for a 2010 Prius to drive a solar-enhanced car, however. Greg Johanson, president of Solar Electric Vehicles in Westlake Village, California, said his company makes a roof-mounted panel for a standard Prius that enables the car to travel up to 15 additional miles a day.

The system costs $3,500, and it takes about a week to make one, Johanson said. Billy Bautista, a project coordinator at the company, said Solar Electric Vehicles gets so many requests for the system that there is a backlog of several months.

The company's Web site says motorists can install the panels themselves, although it recommends finding a "qualified technician."

The system delivers about 165 watts of power to an added battery, which helps power the electric motor, Johanson said.
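As a rough check on figures like these, the energy a panel collects in a day is its power output multiplied by the hours of usable sun, and the added range is that energy divided by what the car uses per mile. The sketch below uses the 165-watt figure quoted above. The sun hours and watt-hours-per-mile values are illustrative assumptions, not numbers from Solar Electric Vehicles or Toyota, and the function name is hypothetical.

```python
def added_miles_per_day(panel_watts, sun_hours, wh_per_mile):
    """Estimate the daily electric range added by a solar panel.

    panel_watts  -- average power the panel delivers while in sun (W)
    sun_hours    -- hours per day of usable sun (assumed value)
    wh_per_mile  -- electricity the car uses per mile (assumed value)
    """
    if wh_per_mile <= 0:
        raise ValueError("wh_per_mile must be positive")
    daily_wh = panel_watts * sun_hours  # watts x hours = watt-hours
    return daily_wh / wh_per_mile


PANEL_WATTS = 165  # figure quoted in the article

# Illustrative assumptions only: neither range comes from the article.
for sun_hours in (4, 6, 8):
    for wh_per_mile in (150, 250):
        miles = added_miles_per_day(PANEL_WATTS, sun_hours, wh_per_mile)
        print(f"{sun_hours} h sun, {wh_per_mile} Wh/mile: {miles:.1f} miles/day")
```

The result scales directly with the assumed sun hours and inversely with the assumed consumption, so the estimate is only as good as those two inputs.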

But others said it would take a lot more power than that to replace an internal combustion engine.

Eric Leonhardt, director of the Vehicle Research Institute at Western Washington University, said that even if solar cells worked far better than they do today, they wouldn't generate enough power for driving substantial distances. The best cells operate at about 33 percent efficiency, but the ones used on vehicles are only about 18 percent efficient, he said.

Leonhardt said it would be more practical to use a car's own solar panel to help charge its battery, and to rely on more efficient panels mounted on a roof or over a parking area to supply the rest of the electricity needed to drive the car.

"Solar panels really need a lot of area," he said.
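Leonhardt's point about area can be put in rough numbers. A panel's peak output is approximately its area times the sunlight falling on it times the cell efficiency. The sketch below uses the 18 percent and 33 percent efficiencies he cited and the 1,000 watts per square meter commonly used as a reference for rating panels. The roof area and the cruising power are hypothetical values chosen only for illustration, and the function names are not from any source.

```python
PEAK_SUN_W_PER_M2 = 1000.0  # reference irradiance commonly used to rate panels


def panel_power_watts(area_m2, efficiency, irradiance=PEAK_SUN_W_PER_M2):
    """Peak electrical output of a panel of the given area and efficiency."""
    return area_m2 * irradiance * efficiency


def area_needed_m2(target_watts, efficiency, irradiance=PEAK_SUN_W_PER_M2):
    """Panel area required to supply target_watts in peak sun."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be between 0 and 1")
    return target_watts / (irradiance * efficiency)


ROOF_AREA_M2 = 1.5          # hypothetical usable roof area
CRUISE_POWER_W = 15_000.0   # hypothetical power needed at cruising speed

# Efficiencies are the two figures cited by Leonhardt.
for label, eff in (("cells used on vehicles", 0.18), ("best cells", 0.33)):
    roof_watts = panel_power_watts(ROOF_AREA_M2, eff)
    area = area_needed_m2(CRUISE_POWER_W, eff)
    print(f"{label}: roof yields about {roof_watts:.0f} W; "
          f"cruising would need about {area:.0f} square meters")
```

Under these assumptions even the best cells fall far short on a car-sized roof, which is consistent with the argument for putting the larger panels on a building or parking structure.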

Leonhardt thinks Toyota's new Prius is a good first step toward using renewable energy. Some cars get hotter than 150 degrees Fahrenheit inside when parked in the sun, so reducing the temperature could allow Toyota to use a smaller air-conditioning unit, he added.

Johanson of Solar Electric Vehicles said he'd like to see Toyota bring the weight of a Prius down from 3,000 pounds to 2,000. He also hopes for a smaller gasoline engine and a larger electric motor. That will probably come in the future, when Toyota unveils a plug-in Prius.

In the meantime, Solar Electric Vehicles sells its version of a plug-in Prius, with a solar panel installed, for $25,000, Bautista said.

Toyota is the largest automaker to incorporate solar power into a mass-produced car. But its solar panel is not the first for a car company. Audi uses one on its upscale A8 model, and Mazda tried one on its 929 in the 1990s.

In addition, a French motor company, Venturi, has produced an electric-solar hybrid. The Eclectic model costs $30,000, looks like a souped-up golf cart and uses roof-mounted solar panels to help power an electric motor. It has a range of about 30 miles and a top speed of about 30 mph.

ABOUT Solar vehicle

Borealis III leads the way during the 2005 North American Solar Challenge, passing by Lake Benton, Minnesota.

A solar vehicle is an electric vehicle powered by solar energy, a renewable source, obtained from solar panels on the surface (generally, the roof) of the vehicle. Photovoltaic (PV) cells convert the Sun's energy directly into electrical energy. Solar vehicles are not practical day-to-day transportation devices at present, but are primarily demonstration vehicles and engineering exercises, often sponsored by government agencies.

Solar cars

Solar cars combine technology typically used in the aerospace, bicycle, alternative energy and automotive industries. The design of a solar vehicle is severely limited by the energy input into the car (batteries and power from the sun). Virtually all solar cars ever built have been for the purpose of solar car races (with notable exceptions).

As in many race cars, the driver's cockpit usually has room for only one person, although a few cars have room for a second occupant. Solar cars have some of the features available to drivers of traditional vehicles, such as brakes, an accelerator, turn signals, rear-view mirrors (or a camera), ventilation, and sometimes cruise control. A radio for communication with the support crew is almost always included.

Solar cars are often fitted with gauges like those in conventional cars. Aside from keeping the car on the road, the driver's main priority is to keep an eye on these gauges to spot possible problems. Cars without gauges for the driver will almost always feature wireless telemetry, which allows the driver's team to monitor the car's energy consumption, solar energy capture and other parameters, freeing the driver to concentrate on driving.

Electrical and mechanical systems

The electrical system is the most important of the car's systems, as it controls all of the power that comes into and leaves the car. The battery pack plays the same role in a solar car that a petrol tank plays in a normal car: it stores energy for future use. Solar cars use a range of batteries, including lead-acid, nickel-metal hydride (NiMH), nickel-cadmium (NiCd), lithium-ion and lithium-polymer batteries.

Many solar race cars have complex data acquisition systems that monitor the whole electrical system, while even the most basic cars have systems that give the driver information on battery voltage and current.
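Battery voltage and current are enough to track how much energy is flowing into or out of the pack, because power is voltage times current and energy is power accumulated over time. The following is a minimal sketch of that bookkeeping, not a description of any team's actual system. The `Sample` structure, the sign convention and the example readings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    seconds: float  # how long this reading is taken to represent
    volts: float    # battery pack voltage
    amps: float     # pack current: positive = charging, negative = discharging


def net_energy_wh(samples):
    """Net change in stored energy over the samples, in watt-hours."""
    # volts x amps = watts; watts x seconds = joules; 3600 joules = 1 Wh
    joules = sum(s.volts * s.amps * s.seconds for s in samples)
    return joules / 3600.0


# Hypothetical readings: ten minutes of charging in sun, then ten of driving.
readings = [
    Sample(seconds=600, volts=96.0, amps=5.0),
    Sample(seconds=600, volts=94.0, amps=-12.0),
]
print(f"Net battery energy change: {net_energy_wh(readings):.1f} Wh")
```

A negative result means the pack gave up more energy than it took in over the period.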

The mechanical systems of a solar car are designed to keep friction and weight to a minimum while maintaining strength. Designers normally use titanium and composites to ensure a good strength-to-weight ratio.

Solar cars usually have three wheels, but some have four. Three-wheelers usually have two front wheels and one rear wheel: the front wheels steer and the rear wheel follows. Four-wheeled vehicles are set up either like normal cars or like three-wheeled vehicles, with the two rear wheels close together.

